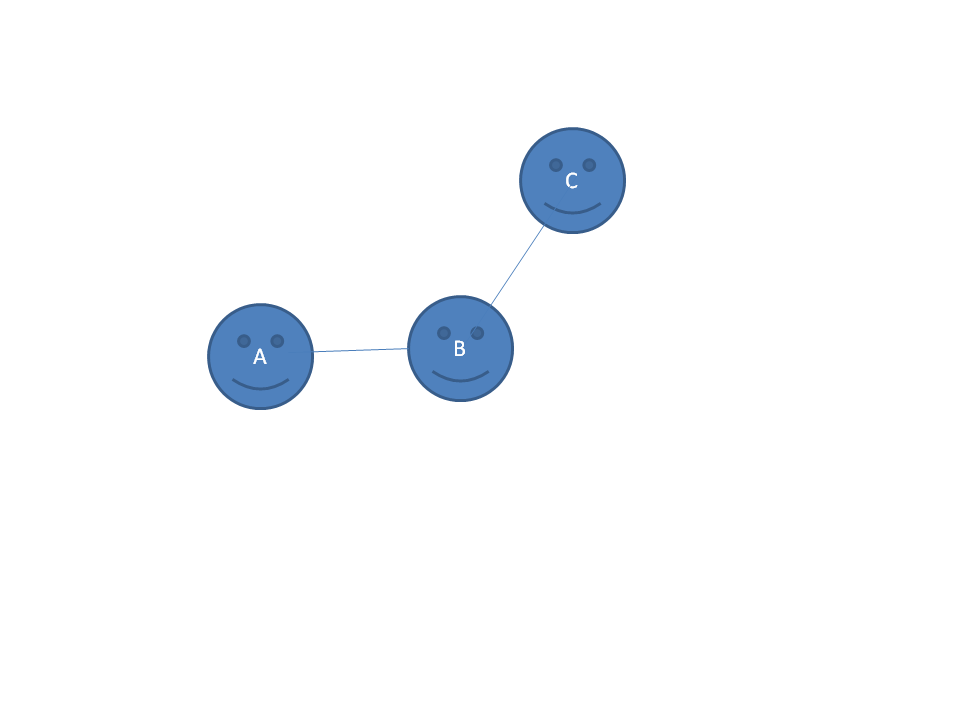

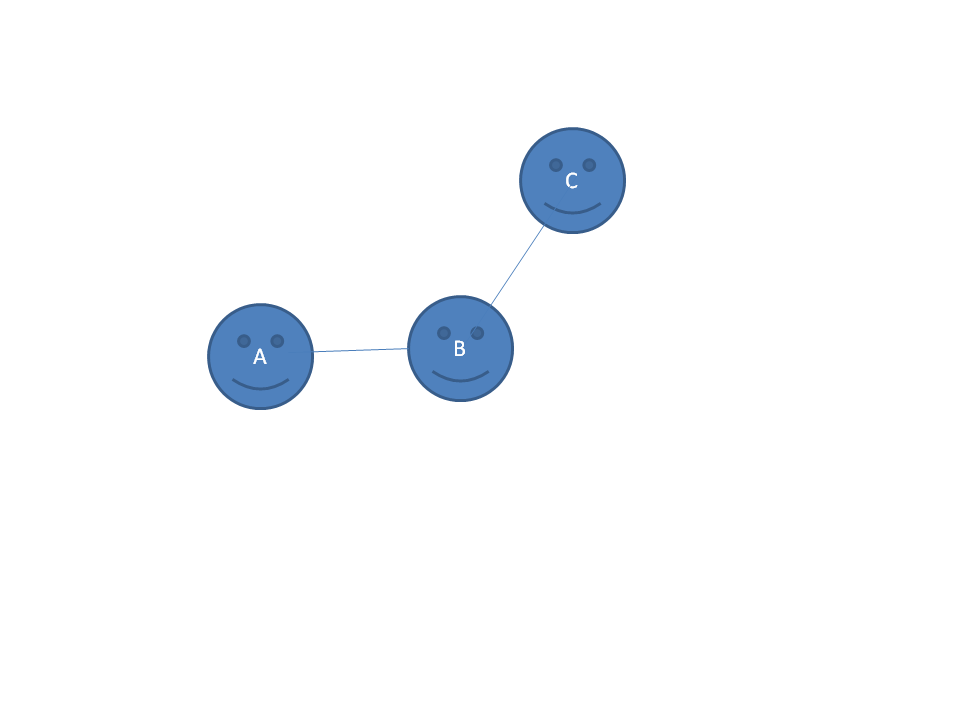

Figure 51. Remember that we tried to optimise the position of the three atoms in this small molecule? Clearly, this is another FF application using the same data.

One of the main complicating factors in the discussion of FF is that there are so many different types of FF 'around'. For example, the WHAT_CHECK software that we have used to look for problems in protein structures probably uses more than fifty different FF.

The second complicating factor is that FF can be used for very different purposes, and they can have widely differing degrees of complexity. The Engh and Huber FF for protein structure validation is a very simple FF.

Question 50:

Let us think about the definitions of the E&H FF. And please, be aware

that these are very, very simple questions!

1) What is a Z-score?

2) What is the data E&H used?

3) In the case of structure validation, what is the algorithm they used?

When working with a FF you need to think about the null-model . The null-model is a prediction of the data in case everything is random, so when there are no deviations from the expected value. In case of the E&H FF the null model is that the bond lengths in your protein deviate randomly from the ideal values, and on average (term used loosly) they deviate as much as you expect from the E&H standard deviations. So, if you have the same kind of errors (and errors does not mean that people made errors, error here means nothing more than deviations from the average caused by for example the chemical environment, poor data, etc.) in your protein as E&H observed in the CSD, then you have the same distribution as E&H, the RMS Z-score is 1.0, and there is nothing to be said about your protein. The outcome is as expected. If, however the RMS-Z differs from 1.0, or if more than 1 in ~10000 bond lengths deviates by more than 4 standard deviations, than you have a deviations from the null model, and you have signal that might lead to a validation warning.

Now the next step.

|

Figure 51. Remember that we tried to optimise the position of the three atoms in this small molecule? Clearly, this is another FF application using the same data. |

First, let us think about the goal. Do we want to achieve that all bond lengths are perfect? Or do we want the RMS-Z score to be 1.0? This point depends on what we are doing. If we just built a homology model we have no data that can tell us anything about potential deviations from perfect, so we can just as well try to get everything perfect (i.e. RMS-Z = 0.0). But if we are crystallographers and we have real experimental data, then the amount of data tells us how much we can allow the RMS-Z score to deviate from 0.0. Perhaps this is a bit complicated, but don;t worry, this is not exam-material. the following question, though, is...

Question 51:

Let us think about this second application of the E&H FF. And please,

be aware that these are again simple questions!

1) Why can we not get all bond lengths perfect by shifting atoms around a bit?

(hint, how big is the average protein? How many bond lengths do you thus,

roughly, expect in the average bond length? And how much does each bond length

depend on each other bond length?)

2) What is the data E&H used?

3) In the case of structure optimisation, what is the algorithm we use?

4) Does this FF application have a null-model?

The first two examples have shown that the same data can be used for different FF applications. We also saw that not every FF has a (needs a) null-model. |

Not all FF need data. Lets think of a 'Force Field' to validate a dice (dobbelsteen in Dutch).

Question 52:

Remember that FF questions always are easy!

1) What is the null-model for the dice validation FF?

2) Why don't we need data to arrive at that null-model?

3) What is the validation algorithm?

This example showed that you can have a FF without underlying real data. Other FF that do not use data, or use it very, very indirectly are quantum chemistry or Molecular Dynamics (MD). |

At present we don't know enough about the relation between the protein sequence, structure, function, and dynamics yet to calculate even the simplest of things in any ab initio fashion. This problem is hurting us because we need to know many things about proteins and their structure and function for fields as diverse as drug docking, human genetics, or bio-fuel enzyme engineering.

That is why bioinformaticians design FF about every day to predict all kinds of things about proteins. We have already discussed two FF of this type. FF1 dealt with the prediction of transmembrane helices, and FF2 with the prediction of secondary structures. In both cases we started by collecting protein structures. These were structures of proteins with transmembrane helices in them for FF1, and simply proteins with a known structure for FF2. For both FF we counted for each amino acid its occurence in the whole dataset, and its occurence in the states we wanted to predict. In the case of FF1, we only needed to count for each amino acid type how often it occured in the whole data set, and how often it occured in a transmembrane helix because the chance that any amino acid type does not occur in a helix simply is 1.0 minus the chance that it does. In FF2, the secondary structure FF, we needed to count a bit more because each residue type can have three states, so we cannot simply say that the chance for one state is 1.0 minus the chance for the other.

Question 53: Let us start with the null-model. Take a look at The amino acid data page, and go for 1.1, Chemical structures. As you will see, this page also holds a table called occurence, and that is the percentage of each atom in all proteins we have sequence till today. For any sequence based force field null-model, we need to predict at some stage how many amino acids of any type we expect. So, let us assume I have a nicely representative dataset of 42133 proteins that together hold 1003791 amino acids. How many of those do you predict to be Ala? So what is the Fpred(Ala)? And the Fpred(Cys)? (These questions are indeed as simple as they seem).

Answer

Question 54: So, if I observe in any FF calculation 10129 Alanines and 4331 Cysteines, which of the two is mor favourable?

AnswerSomebody counted for the same, very big set of data that resulted in the aforementioned amino acid occurence table the number of residues in a helix, and that was 345003.

Question 55: So, if I pick any random residue, how big is the chance that it is helical?

Answer

a) And, how big is the chance that when I pick a random residue, that it is

an Alanine in a helix?

b) So, how many Alanines do you expect there to be in a helix in the whole

dataset (assuming that you know nothing about preferences; so in your

null-model)?

Question 56: So, if I am making a helix prediction FF and I find 30334 Alanines in a helix, does that mean that Ala is good for a helix, or bad?

AnswerBack to our two force fields. For both FF1 and FF2, we need to extract a null-model. This null-model must produce for each residue the distribution over the states as if there is no signal, no asymmetry.

So in both cases you must calculate the chance of finding residue type X in state Y, and from that the predicted frequence FpredP(X,Y). If 7.3% of all your residues are Ala, and 20.1% of all amino acids are in a TM helix, the chance of Ala in a TM helix is 7.3% of 20.1% (which is 1.47%). If your whole data set has 119234 amino acids, you expect 1750 Alanines in a TM helix. And once you calculate that for all 20 amino acid types, you have your null-model.

Question 57: What is the null-model for FF2?

Answer

So, we have now seen that in many cases the null-model simply is the chance that you observe something multiplied by the number of things you can observe. So the null-model for Ala in a helix Fpred(Ala,H) is the change P(Ala,H) * the number of amino acids in the data set. |

So far we have only looked at the null-model, and not at the complete FF yet. before we look at the complete FF, let us look at a few radically different FF.

Force fields are everywhere. In the movie The Elevator, an intelligent elevator goes beserk. The idea to make an elevator smart so that it spends less electricity and gets people faster where they want to be is of course based on a FF. You might want to discuss the data and the algorithm. Also, does this FF have a null-model or not?

The water level in the Waal / Rijn is important for the transport sector, for farmers, and for the government that watches over our dry feet. At this site (in Dutch) you can see that they also give predictions for (near) future water levels.

Question 58: Two questions. 1) Do you think that this prediction is based on a FF? 2) Can you come up with two radically different FFs that can make this prediction?

Answer

Back to serious bioinformatics. In case of FF1 and FF2, we have the null-models. What comes next? Well, next we need to find if we observe things that are different from the null-model predictions. So, for each null-model frequency Fpred(Ala,TM), Fpred(Cys,TM), etc for FF1, and Fpred(Ala,H), Fpred(Ala,S), Fpred(Ala,R), Fpred(Cys,H), Fpred(Cys,S), Fpred(Cys,R), etc for FF2, we need to find the corresponding Fobs(state). And once we have those numbers, we can calculate for each of the 40 states for FF1 (20 amino acid types, each in TM or not in TM) and 60 states for FF2 (20 amino acid types, each in H, S, or R) whether we observe about what we predict (so no signal), or that we either observe it more than we predicted, so that state is biophysically favourable, or less than we predicted from the null-model so that that state is biophysically speaking unfavourable.

After all the counting (and I did the math for you, so don't worry), we found that in FF1 Fobs(Leu,TM)/Fpred(Leu,TM) = 1.42. What are we going to do with this number?

And now comes Boltzmann to the rescue. Let us do some simple chemistry. I have the molecule A. And A can convert into B at a speed of 10-4sec-1. B, on the other hand can convert into A, but like the sheep in the demo (...), B to A is a bit slower, it goes at 10-3sec-1.

Question 59:

Now, if I have a tube that contains water with [A]=11.0 nMol/lit. And I wait

several minutes (perhaps even while shaking the tube), 1) what will the

concentrations of A and B be?

and 2) calculate the energy difference between A and B.

This A<->B example shows that energy and chance are very related things.

In bioinformatics we don't need to stick to chemistry, we also can do alchemy to turm lead into gold (and some of us start companies and turn that concept into Gold!). We can use Bolzmann's law when we don't have an equilibrium, and when we use it, we don't even need -RT in alchemy!

So, when we see that Fobs(Leu,TM)/Fpred(Leu,TM) = 1.42, we can immediately say that the energy difference between (Leu,TM) and the null-model is ln(1.42) = 0.35; so Leu is worth 0.35 kCal/Mole in a TM-helix.

Obviously, if Leu is worth 0.35 kCal/Mole in a TM-helix it is worth -0.35 kCal/Mole in a nonTM part. Now, keep in mind that in chemistry ΔG is negative if 'it is good', in bioinformatics we tend to not call it energy (so we can still enter a bar when chemists or physicists are present) but we call it a 'score' instead, and then a positive score is good.

And, after a bit of math, we have pseudo energies, or scores, for each of the 40, respectively 60 states. What can we do with those energies?

Boltzmann's law can be abused to convert counts into pseudo energies |

So far, everything is rather straighthforward. We could at most start fighting over the dataset used for the null-model in FF1 (should we take the non-TM rest of the TM molecules for the not-TM counting, or should we take other, guaranteed non-TM proteins?) but the dataset for FF2 seems nearly free of discussion.

Question 60: A mean exam question, that I most likely won't ask, would be to find some good reasons why the dataset and null-model for FF2 aren't that straightforward as I make it sound.

AnswerClearly, any algorithm needs to be based on the pseudo energies, while remaining biologically meaningful. There is no need to predict TM helices of less than 20 amino acids long.

Question 61: Why does it not make sense to predict 10 residue long TM helices?

AnswerSo, we could check if we can find stretches of 25 amino acids in a row that have an averag positive TM score (positive pseudo energy). And probably we would like to NOT have a few very bad ones in a row in the middle.

Question 62: How can you figure out what is an acceptable number of bad TM residues in the middle?

AnswerAnd now you write the software that puts things together.

|

As The A-team's Hanibal always says "I love it when a plan comes together!" |

Obviously, there are many, many ways you can design an algorithm. And this is the moment that you start seeing the difference between dumb and smart bioinformaticians.

This is a course in generally applicable concepts and principles, and not a course in applied bioinformatics. So, for the exam it is enough to realise that the algorithm is the weak spot in the whole story, because you will never know whether you found the smartest algorithm or not.

The prediction algorithm is where the human brain becomes important. It is therefore also the weak-spot in the whole approach. |

Obviously, any method that we design must be tested. And that, actually, is the trivial part...

Question 63: How would you test FF1 and FF2?

Answer